AI is hot stuff right now, and I fear I’m jumping on the bandwagon. As a general rule, I avoid anything trendy until it’s safely falling out of style; then and only then do I try it out. But one of my recent assignments at work has been to look into using AI to summarize research articles and see whether it can actually do a good job, which has sent me down numerous rabbit holes (like this video):

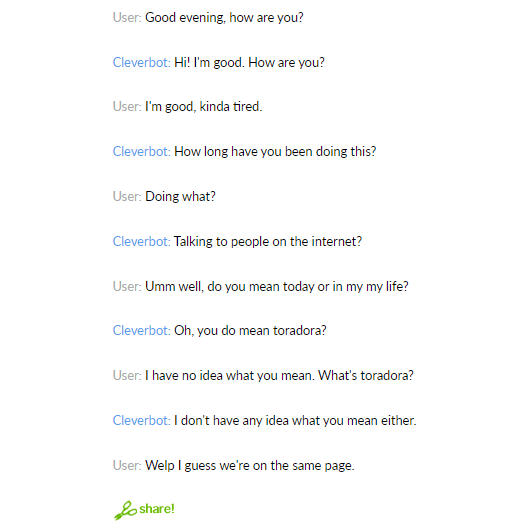

Some members of the team clearly think this is a waste of time and that AI can’t be trusted. It’s true that you have to be careful. For one, AI is only as good as the data it’s trained on. Does anyone remember Cleverbot? It’s a chatbot that, well, chats. Wikipedia says it came out in 2008, which sounds about right given how weird I was when I used it. It was fun to goof around and say weird things to see how it would respond.

Problem is, it learned from the people it talked to, and I was not the only one trying to get it to say strange things. It quickly devolved and became very difficult to talk to.

ChatGPT and other AI tools have some safeguards in place to prevent that from happening. We’ll see how they do. I know there’s already tons of material on jailbreaking and getting ChatGPT to swear and help commit crimes.

Which I definitely have not been researching. Why would you even ask that? Instead, I’ve been testing various AI tools (there are sooo many) and being impressed by how easily AI makes stuff up and makes it sound legit. Example: I fed it an article that mentioned vocabulary skills in general, and the AI filled in specific skills like phonological awareness. It sounded right when I read it, but that’s not what the paper actually said.

AI will also blatantly lie about word counts. I ask for a 200-word summary, it gives me 500 words and tells me it’s 200. The desire to be helpful and give me what I asked for overrides the need for facts.

So be careful, and know your stuff. AI can be a great tool, and I’ve barely begun testing its capabilities, but it also hallucinates a TON. I personally don’t trust it to write better than I can, and fact-checking its output often takes as much time as just writing the thing yourself.

If you do choose to use AI specifically for summarizing, I recommend Claude. It’s super easy to use, and from what I’ve seen so far, it’s way more accurate and natural sounding. You can also try Assistant by scite.ai, which does a good job but only gives you two free prompts without a paid account. You can get around that with an incognito window, but it’s just not as user-friendly, at least for discussing specific papers.

And for finding sources, Elicit.org is amazing, waaaay better than a Google Scholar search. I wish I’d had it for my undergrad. Consensus is ok too, but Elicit is better.

(Sorry, I really should go through and attach links. But I’m feeling lazy, and you know how to Google, so… have at it.)

To recap: AI is cool. There’s a lot of stuff about it. It will lie in an attempt to be helpful. Also it can do this:

Hope y’all have a good one.
